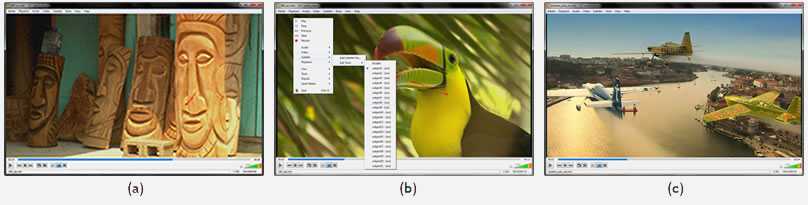

Instantaneous visualization of gaze data, here shown as yellow dots: (a) visualization of gaze data on a single frame in VLC media player; (b) as with normal subtitles, one can easily switch between the gaze data of different subjects; (c) exemplary gaze data on another video sequence.

Metadata in Multimedia Containers for Instantaneous Visualizations and Visual Analytics

With the rising number of gaze behavior studies in which participants are tracked while watching video stimuli, the amount of corresponding gaze data records increases as well. Unfortunately, in most cases, these data are stored in separate files in custom-made or proprietary data formats. Thus, they are hard to access even for experts and effectively inaccessible to non-experts, and expensive or custom-made software is necessary to analyze them. By promoting the use of existing multimedia container formats for distributing and archiving eye tracking and gaze data bundled with the stimulus media, we define an exchange format that can be interpreted by standard multimedia players as well as streamed via the Internet. We converted several gaze data sets into our format, demonstrating the feasibility of our approach and allowing these data to be visualized with standard multimedia players. We also introduce two plugins for one of these players, allowing for further visual analytics. We discuss the benefits of gaze data in multimedia containers and explain possible visual analytics approaches based on our implementations, converted data sets, and first user interviews.
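As a rough illustration of bundling gaze data with the stimulus, the following Python sketch assembles a command line for mkvmerge (from MKVToolNix) that muxes a stimulus video and one subtitle track per subject into a Matroska (.mkv) file. The choice of Matroska and mkvmerge, the helper name `build_mkvmerge_command`, and all file names are assumptions for illustration; this page does not state which tooling was used to build the converted data sets.

```python
import shlex
from pathlib import Path
from typing import List, Sequence


def build_mkvmerge_command(video: str, gaze_tracks: Sequence[str], output: str) -> List[str]:
    """Build (but do not run) an mkvmerge call that muxes a stimulus video
    with one subtitle track per gaze data file (hypothetical helper)."""
    cmd = ["mkvmerge", "-o", output, video]
    for path in gaze_tracks:
        # Name each subtitle track after its file stem (e.g. "subject01") so
        # players list subjects like ordinary subtitle tracks. Track ID 0 is
        # the single track contained in each subtitle file.
        cmd += ["--track-name", f"0:{Path(path).stem}", path]
    return cmd


if __name__ == "__main__":
    cmd = build_mkvmerge_command(
        "stimulus.mp4", ["subject01.usf", "subject02.usf"], "stimulus_gaze.mkv"
    )
    # Print the shell command; subprocess.run(cmd, check=True) would execute it.
    print(shlex.join(cmd))
```

Building the argument list separately from executing it keeps the sketch testable without MKVToolNix installed and avoids shell-quoting problems with unusual file names.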

Demonstration Videos and Datasets

Source Code

VLC 3.0.0 patch

A modified version of subsusf.c (VLC's USF subtitle decoder), which is necessary for playing back the USF files.

USF to ASS translation

The XSL file usf2ass.xsl translates USF subtitles into the ASS (Advanced SubStation Alpha) format.
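To give an idea of what an ASS rendering of gaze data can look like, the sketch below turns a single gaze sample into an ASS `Dialogue` event that places a dot at the gaze position with the `\pos(x,y)` override tag. It is not a port of usf2ass.xsl; the helper names, the dot glyph, and the one-event-per-sample mapping are assumptions. Such lines belong in the `[Events]` section of an ASS script, whose v4+ format line is `Format: Layer, Start, End, Style, Name, MarginL, MarginR, MarginV, Effect, Text`; the coordinates are interpreted in the script's `PlayResX`/`PlayResY` resolution.

```python
def ass_time(seconds: float) -> str:
    """Format seconds as H:MM:SS.cc (centiseconds), the ASS event time format."""
    cs = round(seconds * 100)
    h, rem = divmod(cs, 360_000)
    m, rem = divmod(rem, 6_000)
    s, cs = divmod(rem, 100)
    return f"{h}:{m:02d}:{s:02d}.{cs:02d}"


def gaze_to_dialogue(start: float, end: float, x: float, y: float, style: str = "Default") -> str:
    """Return one ASS Dialogue line that draws a dot at gaze position (x, y)."""
    # Fields: Layer, Start, End, Style, Name, MarginL, MarginR, MarginV, Effect, Text
    text = f"{{\\pos({x:.0f},{y:.0f})}}\u25cf"  # position override tag + filled circle
    return f"Dialogue: 0,{ass_time(start)},{ass_time(end)},{style},,0,0,0,,{text}"


if __name__ == "__main__":
    # Two consecutive gaze samples of one subject, 40 ms apart (assumed 25 fps).
    for t, x, y in [(1.00, 320, 240), (1.04, 330, 236)]:
        print(gaze_to_dialogue(t, t + 0.04, x, y))
```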

Eye tracking data to USF converter

Currently, the eye tracking data to USF converter is only available as a command-line implementation. A version with a graphical user interface is planned for release in the coming months. If you would like the source code of the command-line converter in the meantime, please send an email to Julius Schöning.
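Since the converter itself is not public, the following is only a sketch of the general idea under stated assumptions: each gaze sample `(t, x, y)` becomes one `<subtitle>` element with `start`/`stop` times in a USF-style XML document. The `HH:MM:SS.mmm` time format and the fixed 40 ms display duration are assumptions, and the `<gaze>` element holding the coordinates is a hypothetical placeholder that is neither part of the USF specification nor taken from the authors' converter (a standard player would not render it).

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Tuple

# One gaze sample: (time in seconds, x in pixels, y in pixels).
Sample = Tuple[float, float, float]


def usf_time(seconds: float) -> str:
    """Format seconds as HH:MM:SS.mmm (assumed USF clock format)."""
    ms = round(seconds * 1000)
    h, rem = divmod(ms, 3_600_000)
    m, rem = divmod(rem, 60_000)
    s, ms = divmod(rem, 1000)
    return f"{h:02d}:{m:02d}:{s:02d}.{ms:03d}"


def samples_to_usf(samples: Iterable[Sample], subject: str, duration: float = 0.04) -> str:
    """Build a USF-style XML document with one <subtitle> per gaze sample."""
    root = ET.Element("USFSubtitles", version="1.0")
    metadata = ET.SubElement(root, "metadata")
    ET.SubElement(metadata, "title").text = subject  # e.g. the subject ID
    subtitles = ET.SubElement(root, "subtitles")
    for t, x, y in samples:
        sub = ET.SubElement(
            subtitles, "subtitle", start=usf_time(t), stop=usf_time(t + duration)
        )
        # HYPOTHETICAL placeholder: coordinates stored as attributes of a
        # <gaze> element; not part of the USF specification.
        ET.SubElement(sub, "gaze", x=f"{x:.0f}", y=f"{y:.0f}")
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(samples_to_usf([(1.0, 320, 240), (1.04, 330, 236)], "subject01"))
```

For example, `samples_to_usf([(1.0, 320, 240)], "subject01")` yields a document with a single subtitle running from 00:00:01.000 to 00:00:01.040. Writing one subtitle per sample is the simplest mapping; a real converter might instead merge samples per video frame or per fixation.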

VLC Visual Analytics Plugins

To install the extensions on Linux, please copy SimSub.lua and/or MergeSub.lua into ~/.local/share/vlc/lua/extensions/ for the current user or into /usr/lib/vlc/lua/extensions/ for all users.
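The copy step can also be scripted. The hypothetical Python helper below copies the given .lua files into the per-user directory, or into the system-wide directory when `--system` is passed (which normally requires root privileges). The script name and the `--system` flag are assumptions; only the two target directories come from the installation instructions.

```python
import shutil
import sys
from pathlib import Path
from typing import Iterable, List

# Target directories from the installation instructions (Linux).
USER_DIR = Path.home() / ".local/share/vlc/lua/extensions"
SYSTEM_DIR = Path("/usr/lib/vlc/lua/extensions")


def install_extensions(files: Iterable[str], target: Path) -> List[Path]:
    """Copy each .lua extension file into `target`, creating it if needed."""
    target.mkdir(parents=True, exist_ok=True)
    installed = []
    for name in files:
        src = Path(name)
        if not src.is_file():
            raise FileNotFoundError(f"extension not found: {src}")
        installed.append(Path(shutil.copy2(src, target / src.name)))
    return installed


if __name__ == "__main__":
    # Usage: python install_vlc_ext.py [--system] SimSub.lua MergeSub.lua
    args = sys.argv[1:]
    files = [a for a in args if a != "--system"]
    if not files:
        print("usage: install_vlc_ext.py [--system] SimSub.lua [MergeSub.lua]")
    else:
        dest = SYSTEM_DIR if "--system" in args else USER_DIR
        for path in install_extensions(files, dest):
            print(f"installed {path}")
```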

SimSub: Visualization of different eye tracking datasets in multiple windows

MergeSub: Visualization of different eye tracking datasets in a single window
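The idea of showing several subjects in a single window, as MergeSub does, can be illustrated by merging the per-subject gaze tracks into one time-ordered stream and grouping that stream by video frame. This Python sketch is not the Lua implementation of MergeSub; the sample layout `(t, x, y)`, the 40 ms frame duration (25 fps), and the function names are assumptions.

```python
import heapq
from typing import Dict, Iterable, Iterator, List, Tuple

Sample = Tuple[float, float, float]      # (time in s, x px, y px)
Event = Tuple[float, str, float, float]  # (time in s, subject, x px, y px)


def _tag(subject: str, samples: Iterable[Sample]) -> Iterator[Event]:
    """Attach the subject name to every sample of one track."""
    for t, x, y in samples:
        yield (t, subject, x, y)


def merge_tracks(tracks: Dict[str, List[Sample]]) -> Iterator[Event]:
    """Lazily merge per-subject tracks (each already sorted by time)
    into a single time-ordered stream."""
    return heapq.merge(*(_tag(s, v) for s, v in tracks.items()), key=lambda e: e[0])


def group_by_frame(events: Iterable[Event], frame_dur: float = 0.04) -> Dict[int, List[Tuple[str, float, float]]]:
    """Collect all subjects' gaze points that fall into the same video frame."""
    frames: Dict[int, List[Tuple[str, float, float]]] = {}
    for t, subject, x, y in events:
        frames.setdefault(int(t // frame_dur), []).append((subject, x, y))
    return frames


if __name__ == "__main__":
    tracks = {
        "subject01": [(0.00, 100, 80), (0.05, 104, 82)],
        "subject02": [(0.01, 300, 200), (0.06, 296, 204)],
    }
    for frame, points in group_by_frame(merge_tracks(tracks)).items():
        print(frame, points)
```

`heapq.merge` only holds one pending sample per subject at a time, so the merge stays cheap even for long recordings with many subjects.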

Converted Eye Tracking Data Sets with Instantaneous Visualizations

The following data sets are provided for research purposes. If you use these data sets in the proposed multimedia container format, please cite [S], [S1], [S2], or [S3], as well as the corresponding original data set [A], [B], [C], [K], or [R].

- Real World Visual Processing [S3] - 1 video

- Açik et al. [A] dataset - 216 videos

- Sundberg et al. [B] dataset - 7 videos

- Coutrot & Guyader [C] dataset - 60 videos

- Kurzhals et al. [K] dataset - 11 videos

- Riche et al. [R] dataset - 24 videos

References

[S] J. Schöning, C. Gundler, G. Heidemann, P. König & U. Krumnack. Visual Analytics of Gaze Data with Standard Multimedia Players. Journal of Eye Movement Research, 10(5):1-14, 2017.

[S1] J. Schöning, P. Faion, G. Heidemann & U. Krumnack. Eye Tracking Data in Multimedia Containers for Instantaneous Visualizations. In IEEE VIS Workshop on Eye Tracking and Visualization (ETVIS), pp. 74-78, 2016. IEEE.

[S2] J. Schöning, P. Faion, G. Heidemann & U. Krumnack. Providing Video Annotations in Multimedia Containers for Visualization and Research. In IEEE Winter Conference on Applications of Computer Vision (WACV), 2017. IEEE.

[S3] J. Schöning, A.L. Gert, A. Açik, T.C. Kietzmann, G. Heidemann & P. König. Exploratory Multimodal Data Analysis with Standard Multimedia Player - Multimedia Containers: a Feasible Solution to make Multimodal Research Data Accessible to the Broad Audience. In Proceedings of the 12th Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISAPP), pp. 272-279, ISBN 978-989-758-225-7, 2017. SCITEPRESS.

[A] A. Açik, A. Bartel & P. König. Real and implied motion at the center of gaze. Journal of Vision, 14(2):1-19, 2014. Association for Research in Vision and Ophthalmology (ARVO).

[B] P. Sundberg, T. Brox, M. Maire, P. Arbelaez & J. Malik. Occlusion boundary detection and figure/ground assignment from optical flow. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2233-2240, 2011. IEEE.

[C] A. Coutrot & N. Guyader. How saliency, faces, and sound influence gaze in dynamic social scenes. Journal of Vision, 14(8):5, 2014. Association for Research in Vision and Ophthalmology (ARVO).

[K] K. Kurzhals, C.F. Bopp, J. Bässler, F. Ebinger & D. Weiskopf. Benchmark data for evaluating visualization and analysis techniques for eye tracking for video stimuli. In ACM Workshop on Beyond Time and Errors: Novel Evaluation Methods for Visualization (BELIV), pp. 54-60, 2014. ACM Press.

[R] N. Riche, M. Mancas, D. Culibrk, V. Crnojevic, B. Gosselin & T. Dutoit. Dynamic Saliency Models and Human Attention: A Comparative Study on Videos. Lecture Notes in Computer Science, pp. 586-598, ISBN 978-3-642-37431-9, 2013. Springer Science + Business Media.